The COPKIT project has developed data-driven policing technologies to support Law Enforcement Agencies (LEAs) in analysing, investigating, mitigating and preventing the use of new information and communication technologies by organized crime and terrorist groups. In this blog post, KEMEA describes the methodology adopted for planning, demonstrating and testing the tools and components developed within the project.

Over the course of the project, a series of demonstrations (demos) of the developed tools took place. Their aim was to present the tools' features and functionalities to the consortium as a whole, and to the LEAs and members of the advisory boards in particular, in order to obtain feedback for further development of the tools.

DEMOS GUIDANCE METHODOLOGY (DGM)

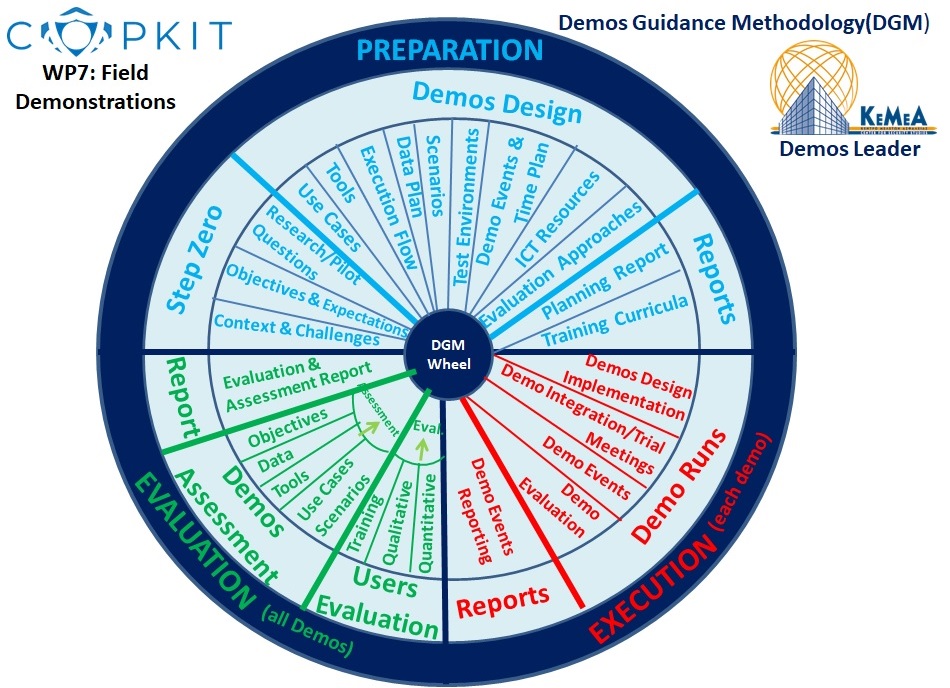

The preparation of the demonstrations and the plan for validating the COPKIT tools were guided by the Demos Guidance Methodology (DGM), a new methodology introduced by KEMEA, which led the field tests, demonstrations and validation of the COPKIT system. The methodology was adapted from the Trials Guidance Methodology (TGM), which was designed for crisis management and used in the Driver+ EU project.

The figure below shows the DGM wheel, the result of transforming, enriching and customising the TGM to fit the needs and requirements of the COPKIT demonstrations.

The DGM consists of three main phases, Preparation, Execution and Evaluation, each comprising several steps. Each phase ends with the submission of one or more reports on its activities.

Overview of COPKIT Demos Guidance Methodology (DGM)

Preparation – Planning Phase

During the Preparation-Planning phase, the partners involved in developing the tools documented the current context, the stakeholders involved, the existing challenges, the tool capabilities and the gaps to be filled. This allowed them to set the scope, goals and expectations for closing those gaps, so that the COPKIT solution could offer differentiation and added value in the LEAs' current strategic, tactical and operational environments.

The first step of the Demos’ Design was to choose the most appropriate use cases around which to tailor the development of the COPKIT tools. The LEAs proposed and selected two use cases for testing and validating the technology: Firearms Trafficking (FT) and Crime as a Service (CaaS).

Next, the partners listed the technical components and functions to be tested. For each component, they described the functionalities expected to be available in each demo and mapped the component, according to its functionality, to the relevant phases of COPKIT’s six-phase Early Warning/Early Action ecosystem: collection, extraction, discovery, enrichment, assessment and forecasting.

Based on the selected use cases and the functionality of each component, the partners then listed the input and output data handled by each component, as well as the datasets to be collected from various sources and used during the demos. This defined the pool of data used in the project and made it possible to address, early on, any data protection, ethical, legal and privacy issues arising from the development of the tools.

Subsequently, scripts and scenarios were prepared for the LEAs to work through as exercises during the demos, following the execution flow of the technical components across COPKIT’s ecosystem phases. Several test environments were defined and set up, namely lab tests for the proof of concept, the Secure Test Lab (STL), the Validation Training Experimentation (VTE) environment and the COPLAB environment, together with the required ICT infrastructure and resources. These allowed the test phases to run securely and according to a schedule prepared for demo events held at different physical or virtual locations.

The last two steps of the Demos’ Design phase were developing the training curricula and defining the evaluation approaches and tools to be used for assessing COPKIT after the demo events.

Execution Phase

This phase covers the actual implementation, for each trial and each private or public demo run, of all the steps planned during the Demos’ Design (preparation) phase.

Before the final demo events, the technical partners, end-user partners and members of the ethical/legal team met to test the components and data in the integrated environment through trial runs and rehearsals.

The partners then convened for three private demonstration events and one public one, as well as 32 individual one-to-one private test sessions between technical partners and LEAs. These sessions allowed the LEAs to test and evaluate the components and to provide quantitative and qualitative feedback using the evaluation approaches and tools designed in the preparation phase.

This approach allowed the technical partners to incorporate domain knowledge into the tools and to improve their performance compared with available state-of-the-art technologies.

Evaluation Phase

All the components and the tools presented during the demos were evaluated by the LEAs, both quantitatively and qualitatively.

For the quantitative evaluation, LEAs were given score-based questionnaires during each demo run. For the qualitative evaluation, participants received individual surveys, preparatory material, exercises, training tools and curricula, which they used before and during the demos’ execution phases.

This evaluation gave the technical partners a broader picture of the end users’ overall assessment of all demos, based on feedback collected individually through interviews, text-based questionnaires and comments provided during the execution phase of each demo run.

The final phase of the methodology involves an overall assessment of the demos and of the project, covering the demo scenarios, the corresponding exercises used in each use case, and how well the COPKIT solution handled them. The users’ feedback on each component’s contribution to the demos and their results, together with an assessment of each component’s credibility based on technical and performance factors, will form the basis of COPKIT’s overall Technology Readiness Level (TRL) assessment.

In addition, assessing the sources and datasets collected, processed and stored, as well as their potential impacts, allows the partners to adequately address data protection, legal, ethical and societal issues.

CONCLUSION

The methodology described above can serve as a basis for designing pilots or demos in EU research projects. KEMEA has already applied it, with appropriate variations and adjustments to fit each project’s needs and requirements, in many EU projects, such as TRESSPASS, ROXANNE and WELCOME.

For more information and updates, follow us on Twitter, LinkedIn and Facebook, and feel free to contact our team at copkit@copkit.eu.